Getting Access to the Instance for Debugging

In some situations, a Gitpod employee may reach out and ask the customer to access their own AWS account to help debug the instance. The permissions granted to the role assumed during this process are described in AWS IAM permission requirements. Gitpod itself can neither access the AWS account of the instance nor assume the role used below; this must be done by a user in the customer's account.

To help debug, a Gitpod employee will ask the user to perform the following steps. Upon completion, the user will have access to the Kubernetes cluster(s) used to run Gitpod:

Sign in to AWS account of the Gitpod instance

Connect to instance:

- Go to EC2

- Find an instance in the appropriate cluster, e.g. one of the

workspace-*ormeta-*instances. - Click Actions -> Connect -> Session Manager -> Connect

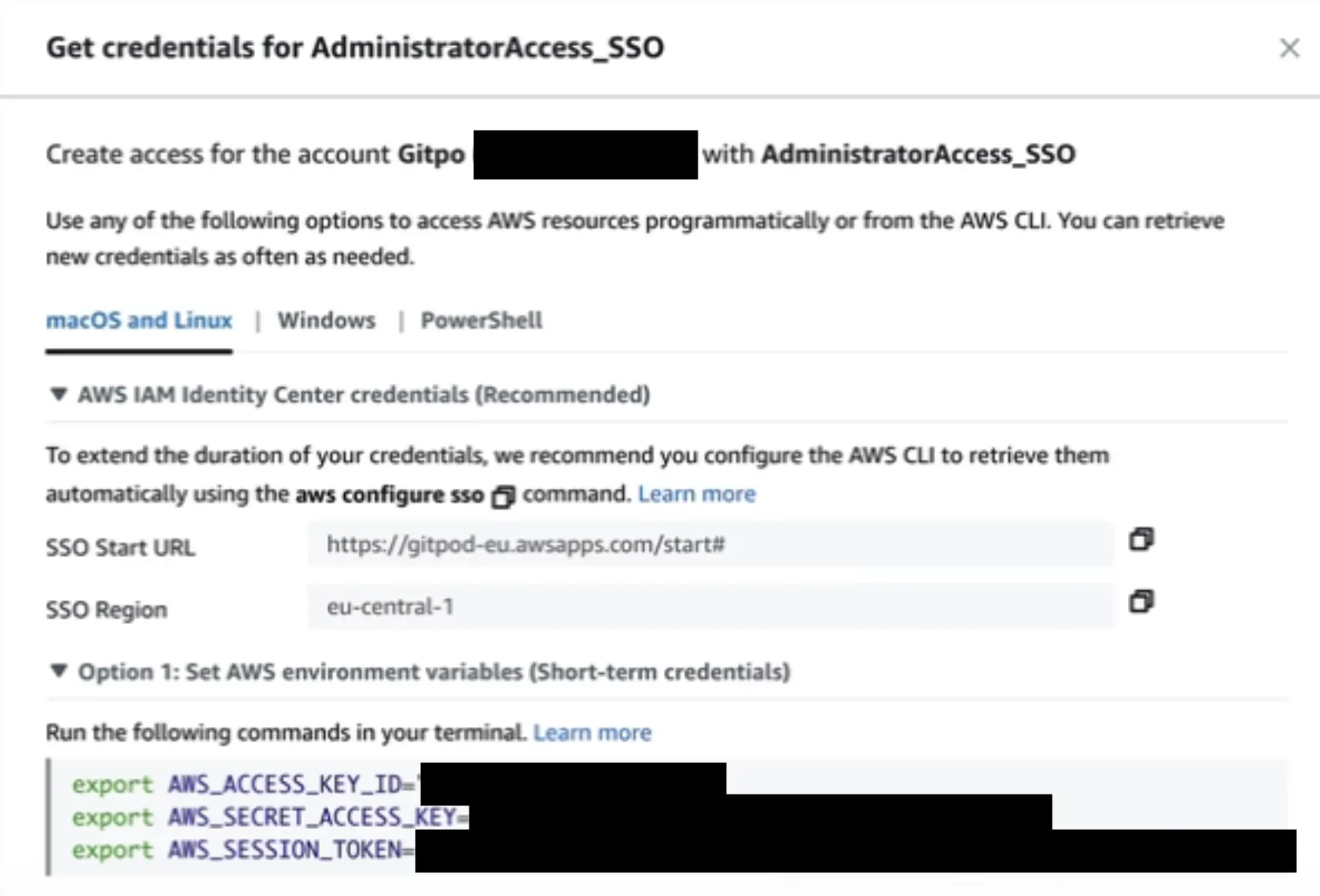

Configure AWS CLI, i.e. set

AWS_*environment variables manually- From the AWS console, create credentials programatically for your current user account and then set these as environment variables:

- From the AWS console, create credentials programatically for your current user account and then set these as environment variables:

Configure

kubectlaccessbash

export GITPOD_INSTANCE_AWS_ACCOUNT_ID="ID of the AWS account where Gitpod runs in" export GITPOD_INSTANCE_NAME="name of the instance, usually the prefix in the url" export CLUSTER_NAME="workspace" # or meta, depending on the cluster being accessed aws eks update-kubeconfig --name "${CLUSTER_NAME}" --role arn:aws:iam::${GITPOD_INSTANCE_AWS_ACCOUNT_ID}:role/gitpod-customer-debug-access-role-${GITPOD_INSTANCE_NAME}You should now have

kubectlaccess, to verify runkubectl get podsTroubleshooting

- Sometimes, you will get an auth error. Often this is due to mistyped input in step 4 above. For example, using the wrong quotation mark (`”` instead of `"`) will cause an auth failure.
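One way to rule out the quotation-mark problem is to check the exported values before running the `aws eks update-kubeconfig` command. The following is a minimal sketch, not part of Gitpod or the AWS CLI: the helper names `has_smart_quotes` and `debug_role_arn` are hypothetical, and the account ID and instance name are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (assumptions for illustration, not Gitpod tooling) for
# checking the values used in step 4 before configuring kubectl access.

# Succeeds (exit 0) if the value contains a typographic quote such as ” or ’.
has_smart_quotes() {
  case "$1" in
    *"“"*|*"”"*|*"‘"*|*"’"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Prints the role ARN in the same shape as the command in step 4.
debug_role_arn() {
  printf 'arn:aws:iam::%s:role/gitpod-customer-debug-access-role-%s\n' "$1" "$2"
}

# Placeholder values; replace them with your own.
GITPOD_INSTANCE_AWS_ACCOUNT_ID="123456789012"
GITPOD_INSTANCE_NAME="example"

for value in "$GITPOD_INSTANCE_AWS_ACCOUNT_ID" "$GITPOD_INSTANCE_NAME"; do
  if has_smart_quotes "$value"; then
    echo "typographic quote found in: $value" >&2
  fi
done

# Show the ARN that would be passed to the AWS CLI.
debug_role_arn "$GITPOD_INSTANCE_AWS_ACCOUNT_ID" "$GITPOD_INSTANCE_NAME"
```

If a typographic quote slipped into a value, it becomes part of the role ARN itself, so printing the ARN before use makes the mistake easy to spot.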

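Another thing worth ruling out when an auth error appears is that the `AWS_*` variables from step 3 were never set in the current shell session. The sketch below assumes long-lived access keys and checks only `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`, the standard variable names read by the AWS CLI; the function name `missing_aws_vars` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (an assumption for illustration): list the
# credential variables from step 3 that are unset or empty in this shell.
# Temporary credentials additionally need AWS_SESSION_TOKEN, which this
# sketch does not check.

missing_aws_vars() {
  [ -n "${AWS_ACCESS_KEY_ID:-}" ] || echo "AWS_ACCESS_KEY_ID"
  [ -n "${AWS_SECRET_ACCESS_KEY:-}" ] || echo "AWS_SECRET_ACCESS_KEY"
  return 0
}

missing="$(missing_aws_vars)"
if [ -n "$missing" ]; then
  echo "unset or empty: $missing" >&2
else
  echo "AWS credential variables are set"
fi
```

Once the variables are set, `aws sts get-caller-identity` shows which identity the AWS CLI resolves, which helps confirm that the credentials belong to the expected account.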