Kubernetes Developer Experience Patterns: Local vs Remote

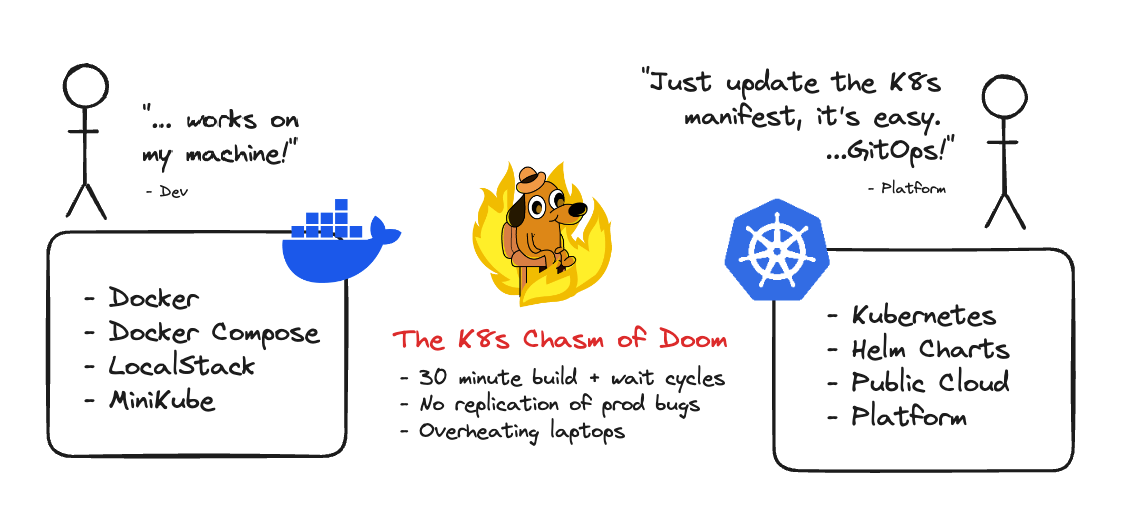

Kubernetes is built as a tool for production. It is highly optimized for scaling, load balancing, and networking, typically in a production environment with access to significant compute resources that often cannot be replicated locally. And because Kubernetes is built for production, building developer experiences for Kubernetes can be challenging.

Kubernetes is not strongly opinionated about the developer experience for creating services that later run on Kubernetes. Without close attention to your developer experience, it’s easy to end up in a situation where you can no longer replicate production bugs, your developer laptops cannot run all of your microservices, and you’re resorting to building containers, pushing them to remote clusters, and suffering 30+ minute wait times for developers to test changes.

Additionally, replicating Kubernetes locally using Docker and Docker Compose soon drifts from remote configurations that might use Helm or some other variation of Kubernetes manifest files. Now you have two sources of truth, duplicated configuration, and a recipe for the dreaded “works on my machine” problem.

Luckily, building good developer experiences on Kubernetes is possible, provided that you know the right approaches and apply them well.

Would you prefer to “watch” this? We recorded a webinar of this exact blog post. You can find the recording here. Otherwise, read on!

Developer Experience Patterns with Kubernetes

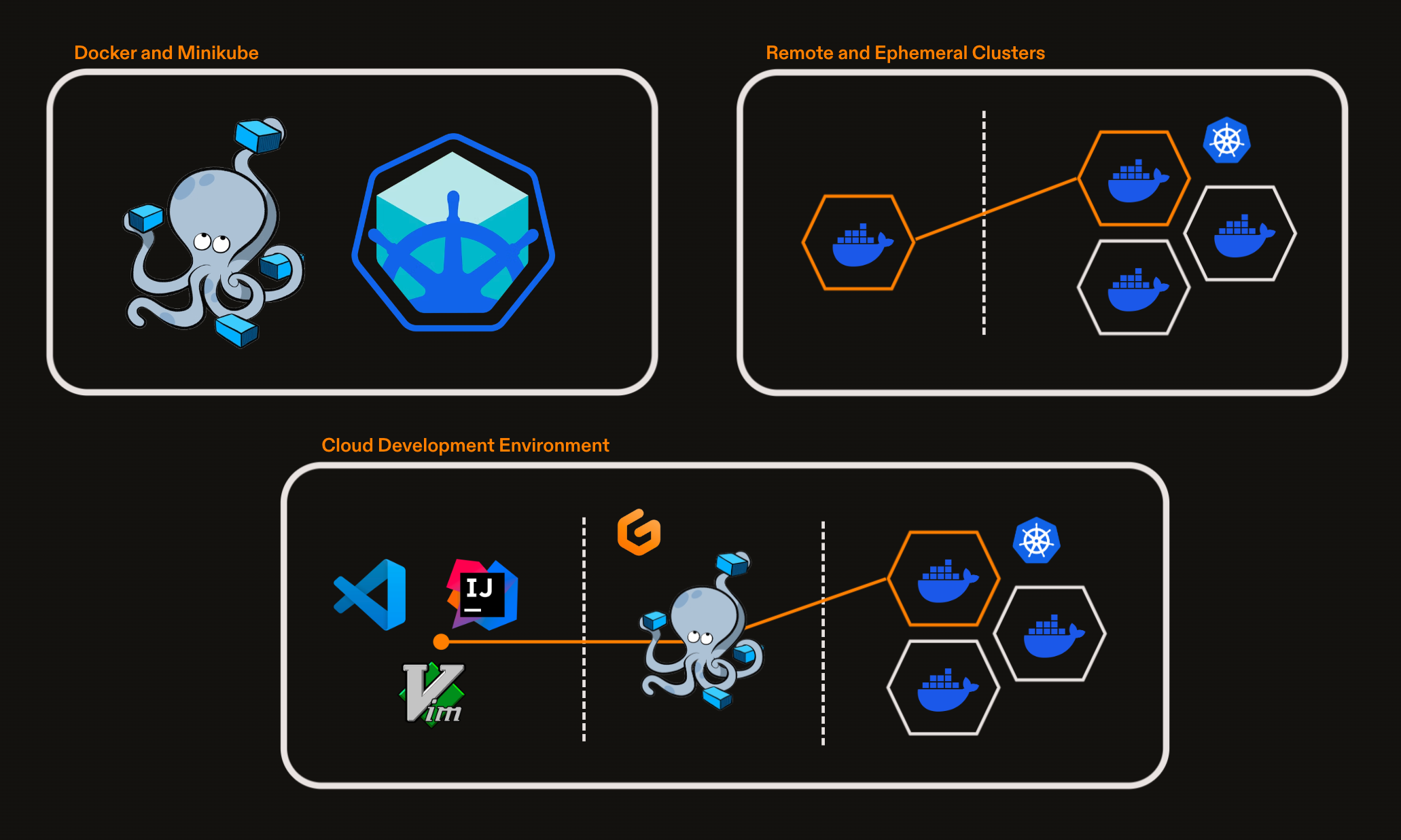

Today, we’re going to talk through some patterns for bridging the gap between your developer experience and your Kubernetes cluster(s):

- Local Container - Using Docker and Docker Compose.

- Local Cluster - Using tools like Minikube.

- Remote Clusters - Remote Proxy - Connect a local service to a cluster.

- Remote Clusters - File Sync - Syncing local files to a remote cluster.

- Cloud Development Environment - Automating your dev environments.

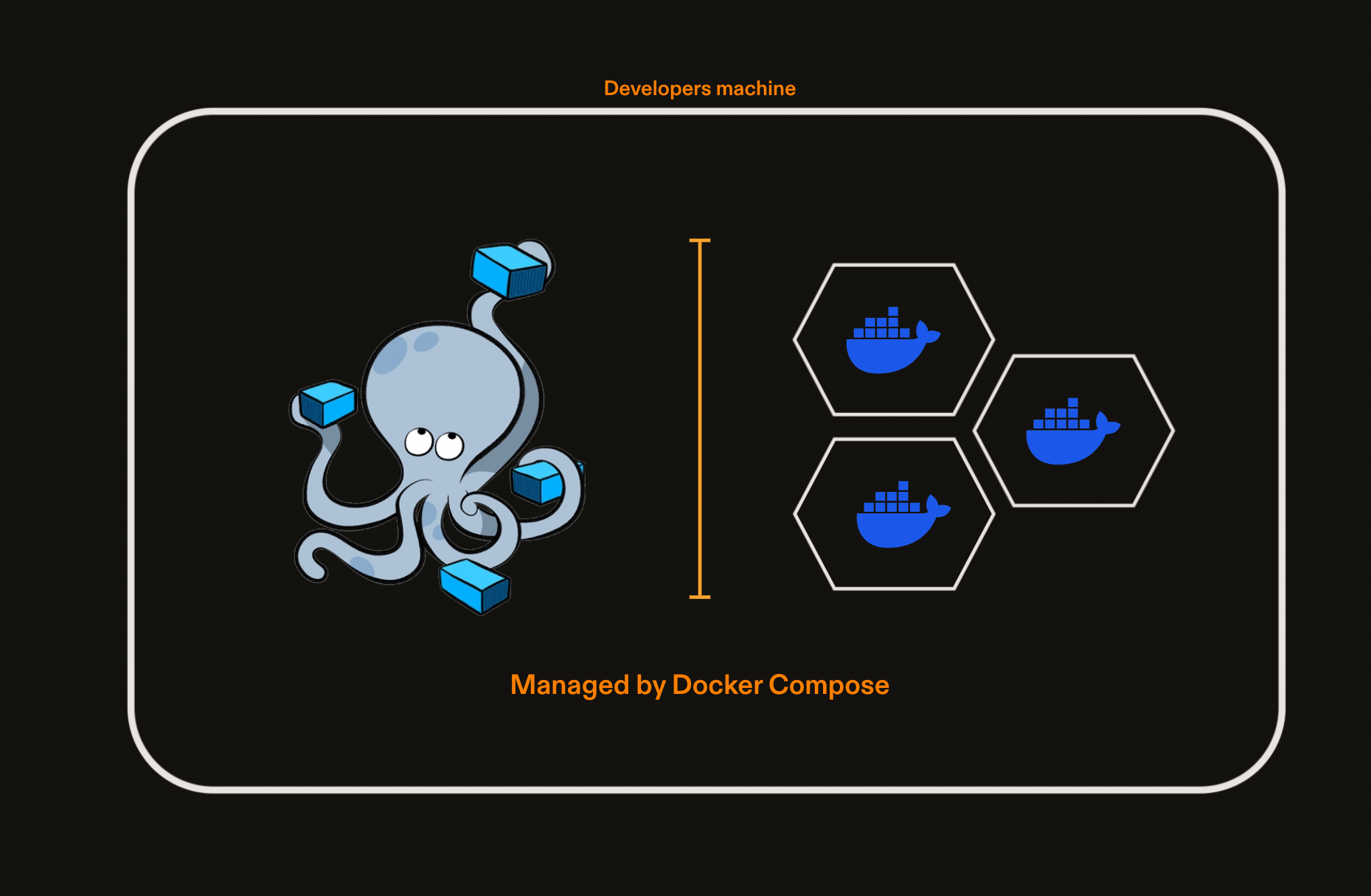

Local container(s) - Using Docker and Docker Compose

The first pattern to discuss is plain Docker containers. Working on a single container is a core and straightforward use case of Docker, especially in a microservice context. We can establish contracts between our microservices and stub out any remote services to create fast feedback loops for developers. We can either run our application outside of the container and package it with Docker only to test it, or mount a volume into a running container to edit and test code directly inside it. Many supporting tools, including Docker Desktop, Rancher Desktop, and OrbStack, give you an interface and other tooling for working with Docker locally.
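To make the idea of contracts and stubs more concrete, here is a minimal Python sketch. It assumes a hypothetical `InventoryClient` dependency (not from any real codebase): the service’s logic depends only on a typed contract, and a local stub satisfies that contract so the service can run without the remote dependency. The `check_contract` helper is an illustrative contract check you could run against both the stub and the real client.

```python
from typing import Protocol


class InventoryClient(Protocol):
    """Contract our service relies on (hypothetical remote inventory service)."""

    def stock_level(self, sku: str) -> int: ...


class StubInventoryClient:
    """In-memory stand-in for the remote inventory service, for local runs."""

    def __init__(self, levels: dict[str, int]) -> None:
        self._levels = dict(levels)

    def stock_level(self, sku: str) -> int:
        # Unknown SKUs report zero stock; this is the behaviour we assume the
        # real service has, and check_contract below pins it down.
        return self._levels.get(sku, 0)


def can_fulfil(client: InventoryClient, sku: str, quantity: int) -> bool:
    """Business logic under test: depends only on the contract, not the transport."""
    return client.stock_level(sku) >= quantity


def check_contract(client: InventoryClient) -> None:
    """Assertions any implementation (stub or real client) should satisfy."""
    level = client.stock_level("does-not-exist")
    assert isinstance(level, int) and level == 0


stub = StubInventoryClient({"sku-1": 5})
check_contract(stub)
print(can_fulfil(stub, "sku-1", 3))  # True
```

Running the same `check_contract` against the real client in CI is what keeps the stub honest; without that, the stub itself becomes another source of drift.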

However, one core challenge of using Docker or Docker Compose as your configuration is configuration drift (to learn more about configuration drift challenges with Kubernetes, check out our blog post on the k8s chasm of doom). You will need to ensure your local Docker or Docker Compose configurations are kept up to date with your Kubernetes configurations. It’s also easy to slip into the habit of testing against Kubernetes only through the “outer loop” (e.g. building an image, pushing it, and waiting for it to deploy) rather than through the “inner loop” (testing your changes on your machine).
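A lightweight guard against this drift is a check, for example in CI, that compares the two configurations. The sketch below assumes both files have already been parsed into Python dictionaries (for example with a YAML library) and only compares the image and literal environment variables of a single service; the sample values are hypothetical. Real configurations have many more fields (ports, volumes, resources) that a fuller check would need to cover.

```python
def compose_env(service: dict) -> dict[str, str]:
    """Normalise a Compose `environment`, which may be a mapping or a KEY=VALUE list."""
    env = service.get("environment", {})
    if isinstance(env, list):
        pairs = {}
        for item in env:
            key, _, value = item.partition("=")
            pairs[key] = value
        return pairs
    return {key: "" if value is None else str(value) for key, value in env.items()}


def k8s_env(container: dict) -> dict[str, str]:
    """Collect literal env values from a Kubernetes container spec.

    Entries using valueFrom (Secrets, ConfigMaps) are skipped in this sketch.
    """
    return {e["name"]: e["value"] for e in container.get("env", []) if "value" in e}


def find_drift(service: dict, container: dict) -> list[str]:
    """List human-readable differences between a Compose service and a container spec."""
    problems = []
    if service.get("image") != container.get("image"):
        problems.append(f"image: compose={service.get('image')} k8s={container.get('image')}")
    local, remote = compose_env(service), k8s_env(container)
    for key in sorted(local.keys() | remote.keys()):
        if local.get(key) != remote.get(key):
            problems.append(f"env {key}: compose={local.get(key)} k8s={remote.get(key)}")
    return problems


# Hypothetical parsed configs for an "api" service
compose_service = {"image": "shop/api:1.4.2", "environment": ["LOG_LEVEL=debug", "DB_HOST=db"]}
k8s_container = {
    "name": "api",
    "image": "shop/api:1.5.0",
    "env": [{"name": "LOG_LEVEL", "value": "debug"}, {"name": "DB_HOST", "value": "postgres"}],
}

for problem in find_drift(compose_service, k8s_container):
    print(problem)
# image: compose=shop/api:1.4.2 k8s=shop/api:1.5.0
# env DB_HOST: compose=db k8s=postgres
```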

Benefits

- Fast developer feedback loops when developing applications locally.

- No need to wait for long continuous integration runs to deploy and test changes.

- Developers can build and debug with the tools they’re used to (e.g. Docker).

Limitations

- Development is bound to the resources of the developer machine.

- Docker Compose configurations can drift from Kubernetes configurations.

- Dependencies on the operating system and hardware, and licenses for tools like Docker Desktop.

- Maintaining contracts between microservices is challenging.

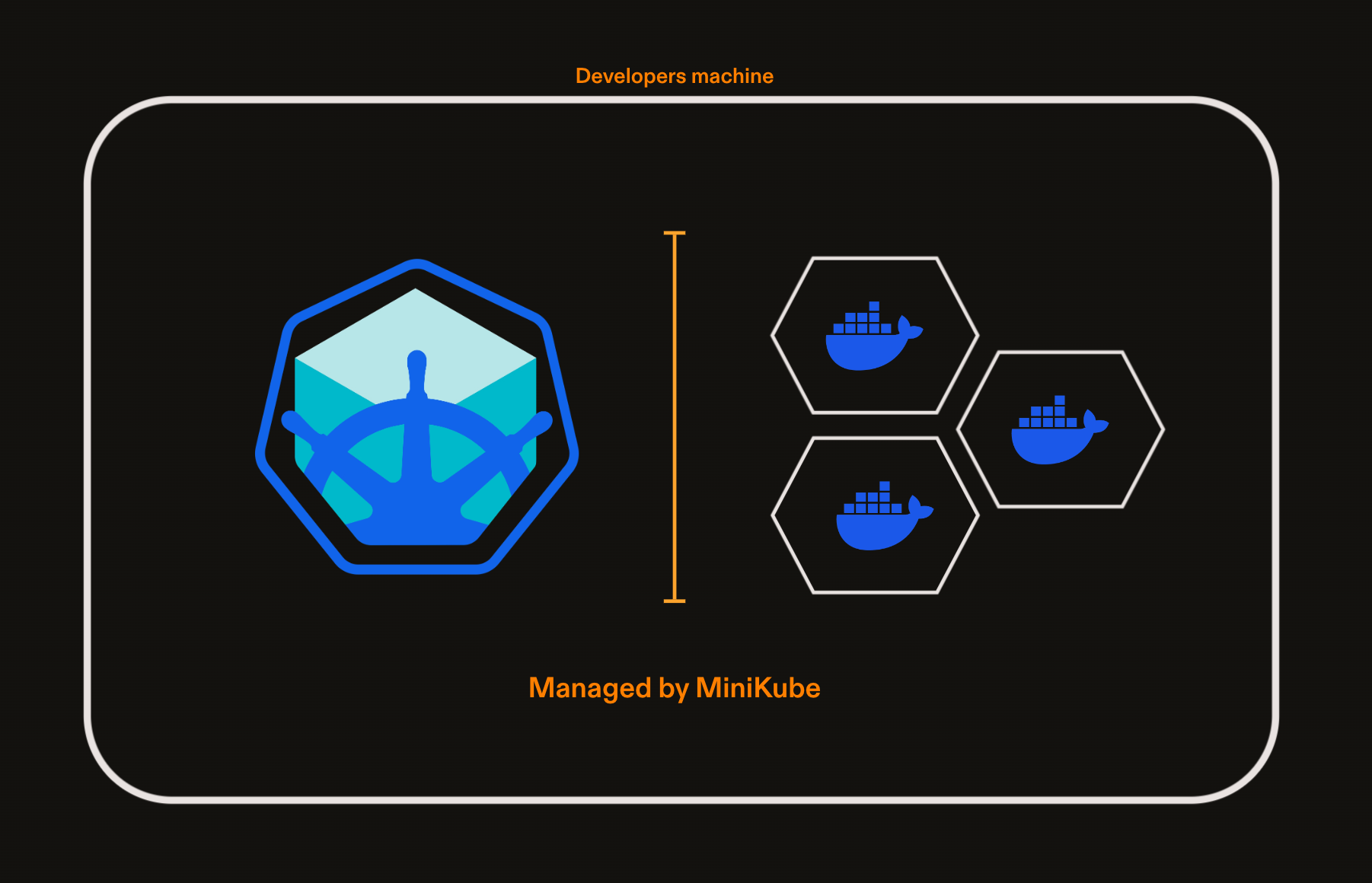

Local Cluster (Using tools like Minikube)

An option beyond Docker when working on our local machines is to run an entire Kubernetes cluster locally. Here we use all of our Kubernetes manifest files for local development. One of the most popular tools for running Kubernetes locally is Minikube, but there are other options such as kind, K3s, kubeadm, Docker Desktop, and MicroK8s. With these tools, we need to look at how they implement virtualisation to understand which operating systems they support, and with any local cluster tool you’re still bound to the hardware of your local machine.

Using a tool like Minikube essentially “sidesteps” the configuration drift challenges by using the same configuration files as your upstream Kubernetes cluster, except running locally. We can test our Kubernetes deployment strategies, check interactions with mounted volumes, and test manifest file changes inside a cluster on our machine. However, local clusters aren’t a silver bullet for configuration drift, as certain aspects of your Kubernetes cluster, like load balancer configurations, networking setups, and VPNs, can be very hard to replicate locally inside tools like Minikube.

Minikube can replicate production more closely than Docker Compose, but it will never be a perfect representation of production.
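One common way to keep a single source of truth while accommodating the parts that differ locally (such as load balancer configuration) is a shared base manifest plus a small per-environment overlay, which is the idea behind Kustomize overlays and per-environment Helm values files. The sketch below is a toy deep merge over already-parsed manifests, not Kustomize’s strategic merge patch; the `api` Service and the NodePort override for a laptop cluster are hypothetical examples.

```python
import copy


def deep_merge(base: dict, overlay: dict) -> dict:
    """Return a new dict where overlay values win and nested dicts merge recursively.

    Lists are replaced wholesale, unlike Kustomize's strategic merge patches.
    The inputs are never mutated.
    """
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Shared base manifest: the source of truth for every environment
base_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "api"},
    "spec": {"type": "LoadBalancer", "ports": [{"port": 80, "targetPort": 8080}]},
}

# Hypothetical local overlay: expose the Service via NodePort on a laptop cluster
local_overlay = {"spec": {"type": "NodePort"}}

local_service = deep_merge(base_service, local_overlay)
print(local_service["spec"]["type"])  # NodePort
```

Keeping the overlay this small makes the local/production differences explicit and reviewable, which is exactly the part a local cluster cannot reproduce faithfully.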

Some of these tools are created with the explicit intention of working locally, but others, like K3s, are designed for production and also work locally. If your main challenge is configuration drift, you have good hardware, and you don’t anticipate any operating system compatibility issues, a local cluster can mitigate feedback loop challenges. You can also combine a local Docker and/or Docker Compose flow with Minikube, using Minikube to validate changes.

Benefits

- You can replicate a Kubernetes cluster more closely than with plain Docker.

- You can leverage developer hardware without incurring extra cloud costs.

- You can debug using Kubernetes-native tools like kubectl.

Limitations

- You are still constrained by the resources of the hardware.

- You may need to consider support for different operating systems.

- Local cluster tools cannot fully replicate production.

- Launching many services locally can take a long time.

- Running many services locally will put a strain on your hardware.

Remote Clusters (Remote Proxy)

The challenge of developing against remote Kubernetes clusters is that the development feedback loop becomes long. Rather than working in their “inner loop” (e.g. making changes before they commit), developers are forced into the “outer loop”, where they need to commit changes and wait for a deployment. Deploying to a cluster often takes quite some time, and packaging, building, and waiting for a cluster to deploy can be a painful feedback loop, no matter how much you optimize continuous integration and deployment.

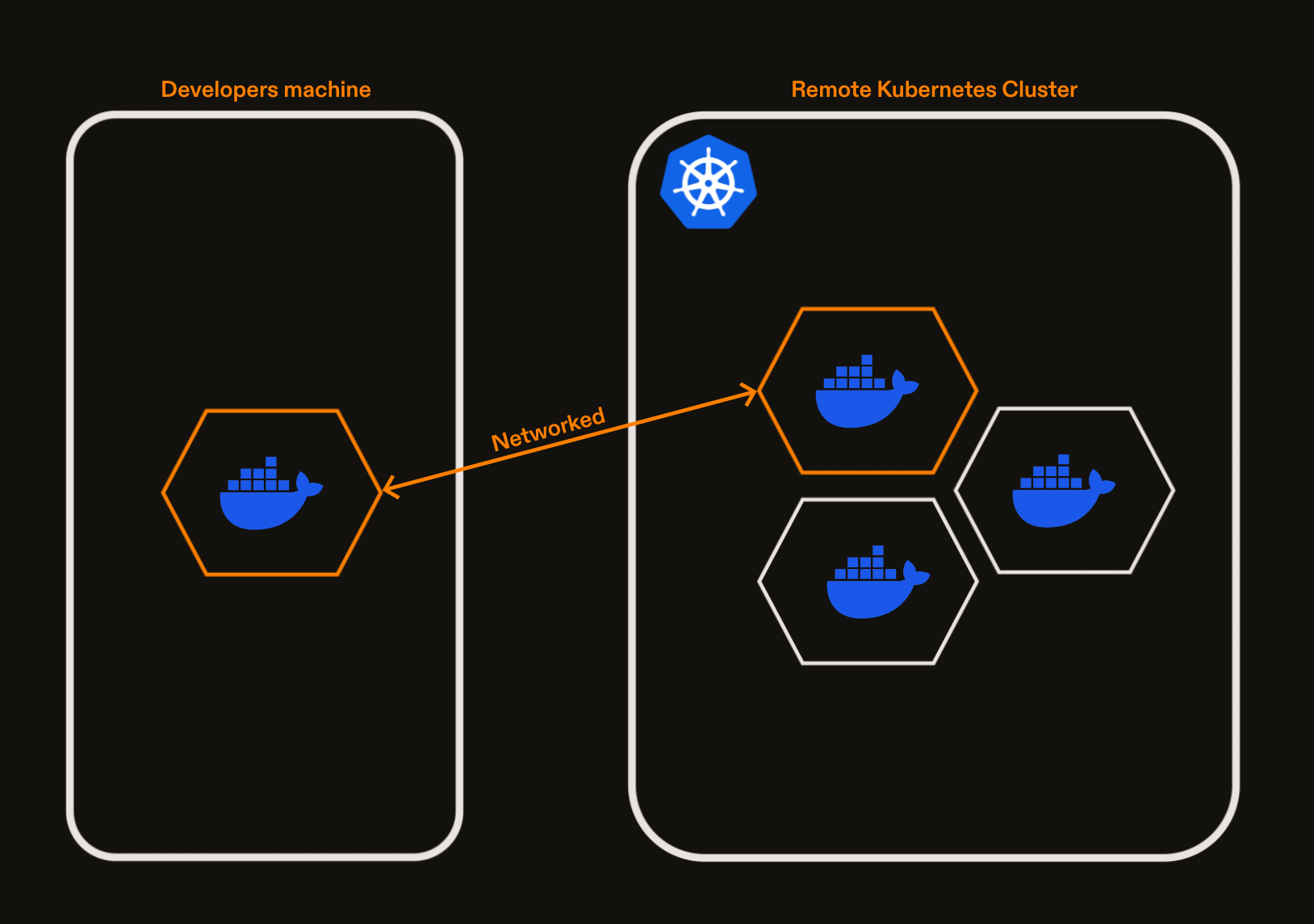

One pattern for overcoming the painfully long feedback loop of developing against a remote Kubernetes cluster is called “remocal”. You still run your Docker container or service locally, but use a tool like Telepresence to proxy requests from within your cluster to your local machine. The benefit is that you keep the “inner loop” of your developer experience while “teleporting” your local service so that it behaves as if it were running in the cluster.
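The routing decision at the heart of this pattern can be modelled in a few lines. The Python sketch below is a toy, not how Telepresence or any other tool is implemented: real tools do this at the network, container, or process level. It assumes a hypothetical header name and developer ID, and models a header-based intercept in which requests carrying a developer’s header go to their laptop while all other traffic keeps hitting the in-cluster version of the service.

```python
from dataclasses import dataclass
from typing import Callable, Mapping

# A "service" here is just a function from request path to response body
Handler = Callable[[str], str]


@dataclass
class InterceptRouter:
    """Toy model of a remocal intercept for a single service."""

    cluster_handler: Handler  # the version of the service running in the cluster
    local_handler: Handler  # the developer's locally running version
    header: str = "x-dev-intercept"  # hypothetical header name
    developer: str = "alice"  # hypothetical developer ID

    def route(self, path: str, headers: Mapping[str, str]) -> str:
        # Only requests explicitly tagged for this developer reach the laptop;
        # header names are matched case-sensitively here for simplicity.
        if headers.get(self.header) == self.developer:
            return self.local_handler(path)
        return self.cluster_handler(path)


router = InterceptRouter(
    cluster_handler=lambda path: f"cluster handled {path}",
    local_handler=lambda path: f"laptop handled {path}",
)
print(router.route("/orders", {"x-dev-intercept": "alice"}))  # laptop handled /orders
print(router.route("/orders", {}))  # cluster handled /orders
```

Routing only tagged requests is one way to let several developers share a cluster without hijacking each other’s traffic.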

Whilst Telepresence is one way to achieve this network proxying pattern, there are other tools, including mirrord, Gefyra, Bridge to Kubernetes, and kubefwd. Each tool differs in its implementation. For instance, some intercept requests at the process level, some at the container level, and some at the machine or operating system level. Some tools require root access on the local machine, and others do not.

A challenge of this approach is getting all the tooling set up correctly. If we write good documentation and ensure our developers understand how to use and configure Telepresence, this pattern can work well. Another piece of this puzzle is the infrastructure and automation required to deploy the cluster(s): how and when do we deploy our clusters or namespaces? That is another problem you’ll need to solve with this pattern.
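One common answer to the namespace question is a namespace per developer (or per developer and branch), created on demand. Kubernetes namespace names must be valid RFC 1123 labels: at most 63 characters, lowercase alphanumerics or `-`, starting and ending with an alphanumeric. The sketch below derives such a name deterministically; the `dev` prefix and the hash-suffix truncation scheme are our own assumptions, and actually creating the namespace (for example with `kubectl create namespace`) is left out.

```python
import hashlib
import re

MAX_LABEL = 63  # namespace names must be RFC 1123 labels of at most 63 characters


def dev_namespace(developer: str, branch: str, prefix: str = "dev") -> str:
    """Derive a deterministic, valid namespace name for a developer and branch."""
    raw = f"{prefix}-{developer}-{branch}".lower()
    # Replace anything outside [a-z0-9-] with '-', collapse repeats, trim the ends
    name = re.sub(r"[^a-z0-9-]+", "-", raw)
    name = re.sub(r"-{2,}", "-", name).strip("-")
    if len(name) > MAX_LABEL:
        # Truncate, but append a short hash so distinct long inputs stay distinct
        digest = hashlib.sha256(raw.encode()).hexdigest()[:8]
        name = name[: MAX_LABEL - 9].rstrip("-") + "-" + digest
    return name


print(dev_namespace("Alice.Smith", "feature/Add_Login"))  # dev-alice-smith-feature-add-login
```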

Benefits:

- Aligns on Kubernetes manifests as configuration source-of-truth.

- Keeps your inner loop speed, whilst leveraging remote development.

- Can reduce cloud costs by optimizing resource usage.

- Ensures consistency in your environment

Limitations:

- Incurs cloud costs for remote services.

- Requires version consistency with the cluster

- Encourages a distributed monolith architecture.

- Can be tricky to set up and debug for developers.

Remote Clusters (Sync)

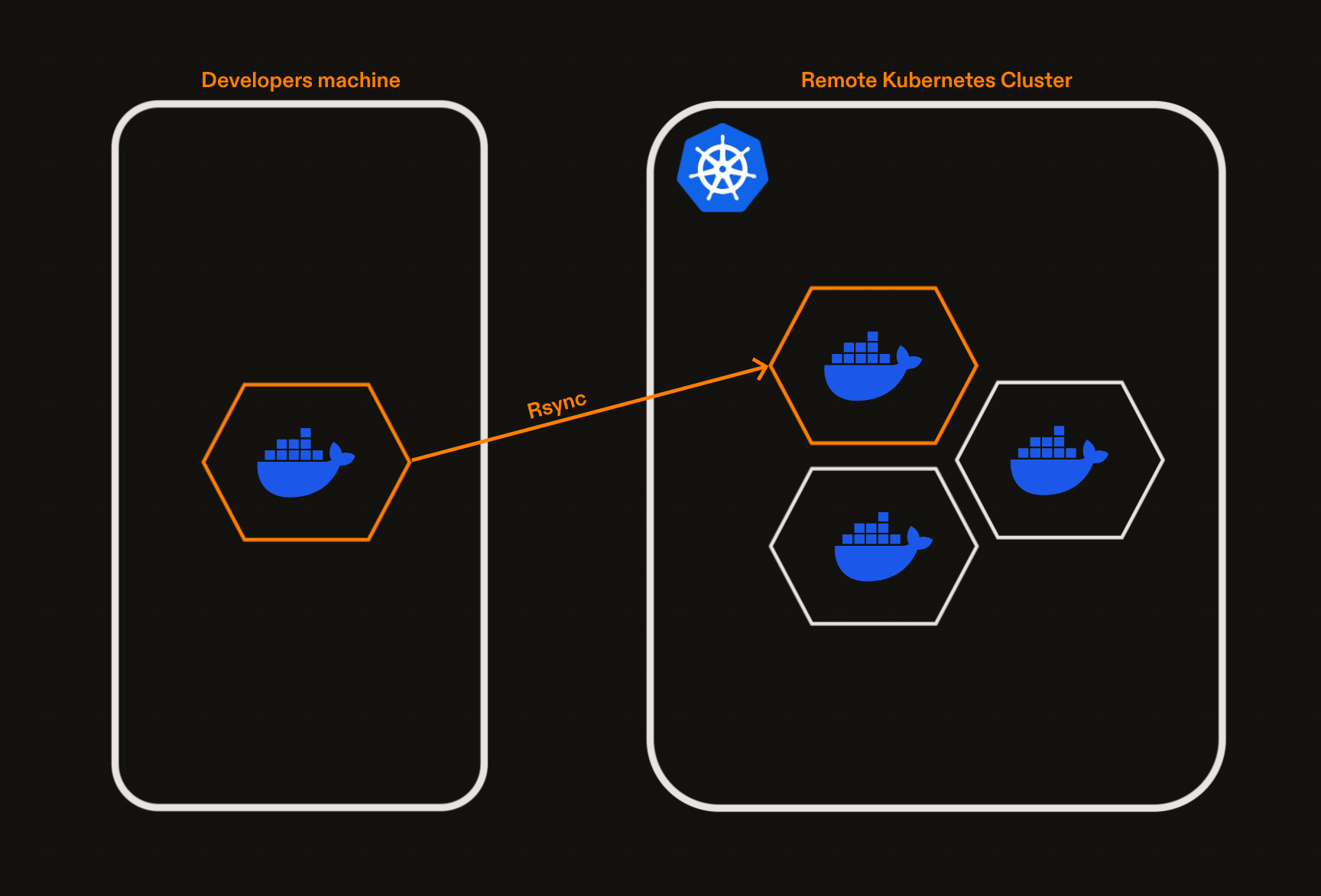

Another option, similar to using Telepresence and a network proxy, is to move development entirely to the cloud and develop “inside a cluster” by syncing files from your machine to the remote cluster. This pattern keeps the files on your machine, but syncs them into a running remote Kubernetes cluster. Because your Kubernetes manifests remain the source of truth, this pattern avoids the configuration drift issues of Docker Compose, but running everything in the cloud can also become very expensive. Some tools that can facilitate this pattern are Tilt, Skaffold, and DevSpace.
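At the core of the file-sync pattern is working out which local files changed since the last sync. Tools like Tilt, Skaffold, and DevSpace rely on file watchers and their own transfer mechanisms; the Python sketch below is a simplified, polling-style stand-in that compares content hashes between two snapshots and returns which files would need uploading to, or deleting from, the remote container. The actual transfer step (for example `kubectl cp`) is deliberately left out.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to a SHA-256 digest of its contents."""
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff(before: dict[str, str], after: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (files to upload, files to delete) to bring the remote copy up to date."""
    upload = sorted(path for path, digest in after.items() if before.get(path) != digest)
    delete = sorted(path for path in before if path not in after)
    return upload, delete


# Usage: simulate an edit, a new file, and a deletion in a temporary project
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "app.py").write_text("print('v1')\n")
    (root / "old.txt").write_text("stale\n")
    before = snapshot(root)

    (root / "app.py").write_text("print('v2')\n")
    (root / "old.txt").unlink()
    (root / "new.py").write_text("x = 1\n")
    changes = diff(before, snapshot(root))

print(changes)  # (['app.py', 'new.py'], ['old.txt'])
```

Syncing only the changed files, rather than rebuilding and pushing an image, is what keeps this pattern’s feedback loop short.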

If you run an entire cluster for each of your developers, you’re deploying and running every service for every developer, on top of what already runs in production. If you share environments between developers, they might step on each other’s work and end up in odd debugging situations where multiple services are changing and colliding with each other. Remote development on a cluster can also put you on a slippery slope where costs only ever increase and developers become increasingly unable to use their own machines, as less investment goes into contract testing, service isolation, and local testing.

This pattern still requires developers to maintain their development environments themselves, with code on the developer’s machine. You might also want to build tools to help debug your remote cluster when things go wrong. With this pattern, developers also need to learn Kubernetes terminology and how to debug a distributed system running in the cloud (or wherever your remote cluster is deployed).

Benefits:

- Aligns on Kubernetes manifests as configuration source-of-truth.

- Development is done in a production-like environment.

- Faster feedback loop for distributed monolith architecture.

Limitations:

- Can incur significant cloud costs.

- Code still exists on the developer’s machine.

- Developers have to configure secrets and tools, and maintain their environment.

- The pattern can mask a distributed monolith architecture.

- Developers might have to debug remotely using cloud tools, etc.

Cloud Development Environments

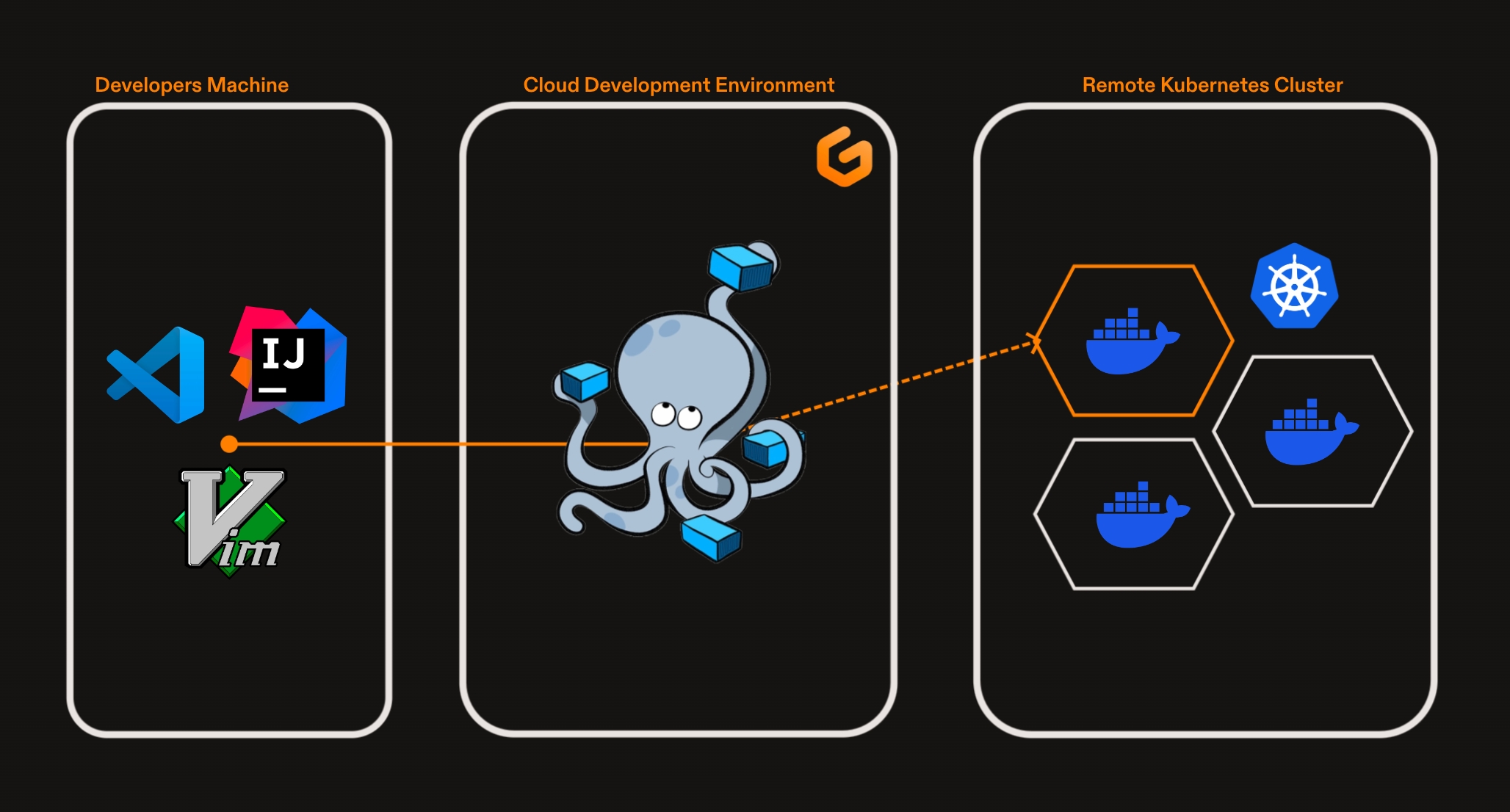

A challenge with many of the above patterns is that almost all of them require sophisticated CLIs that developers must download and configure. Developers have to configure their authentication or secrets on their machine. Whilst these patterns reduce complexity in some ways by aligning configuration files, they can increase complexity in other areas by exposing developers to Kubernetes terminology, configuration, and its debugging ecosystem. This is where Cloud Development Environments (CDEs) come into the picture.

CDEs are pre-configured development environments with all the tools, libraries, and dependencies required to run an application. Gitpod is one CDE solution, and with CDEs you can automate away many of the developer experience challenges that come with Kubernetes, like laptop resource constraints, operating system compatibility for running clusters locally, or configuring tools like Telepresence or remote cluster syncing.

CDEs can help you automate the installation of necessary packages and create a ready-to-code experience for developers. Instead of asking developers to install specific tools, understand Docker and Linux inside out, or configure secrets on their machine, you can provide an environment definition that comes packaged and ready to go.
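In a CDE, the environment definition (for Gitpod, a `.gitpod.yml` file in the repository) is where these tools get installed once for everyone. A complementary idea is a readiness check that runs when a workspace starts and fails loudly if something is missing. The Python sketch below shows such a check; the tool list is a hypothetical example for a Kubernetes-focused workspace, and the `which` parameter exists only so the check can be exercised without depending on the real `PATH`.

```python
import shutil
from typing import Callable, Iterable, Optional

# Hypothetical tool list for a Kubernetes-focused workspace
REQUIRED_TOOLS = ("docker", "kubectl", "helm", "telepresence")


def missing_tools(
    tools: Iterable[str],
    which: Callable[[str], Optional[str]] = shutil.which,
) -> list[str]:
    """Return the tools that cannot be found (by default, on PATH via shutil.which)."""
    return [tool for tool in tools if which(tool) is None]


# Example with a fake lookup, so the result doesn't depend on this machine
fake_path = {"docker": "/usr/bin/docker", "kubectl": "/usr/local/bin/kubectl"}
print(missing_tools(REQUIRED_TOOLS, which=fake_path.get))  # ['helm', 'telepresence']
```

In a real workspace you would call `missing_tools(REQUIRED_TOOLS)` at startup and exit with a non-zero status if the list is not empty, so a broken environment definition surfaces immediately rather than halfway through a debugging session.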

Cloud development environments have a few advantages compared to developing entirely in a remote cluster. Firstly, developers can continue to use the tools they are familiar with, such as Docker and Docker Compose, with workspaces sized as large as necessary. Your Docker Compose or Kubernetes manifest files can remain the source of truth. A Cloud Development Environment can be a great complement to the patterns above.

Benefits

- Don’t need to support many operating systems.

- Continue to use Docker and Docker Compose flows.

- Developer tools such as Telepresence can be pre-configured in workspaces.

- Kubernetes tools like kubectl can be pre-installed in workspaces.

- Secrets access can be automated for developers in their environment.

- Not limited by the resources of a laptop or local machine.

Limitations

- Increased cloud spend when compared to an entirely local solution.

- Not opinionated about Docker, Telepresence, etc.

Which patterns are right for your developer experience?

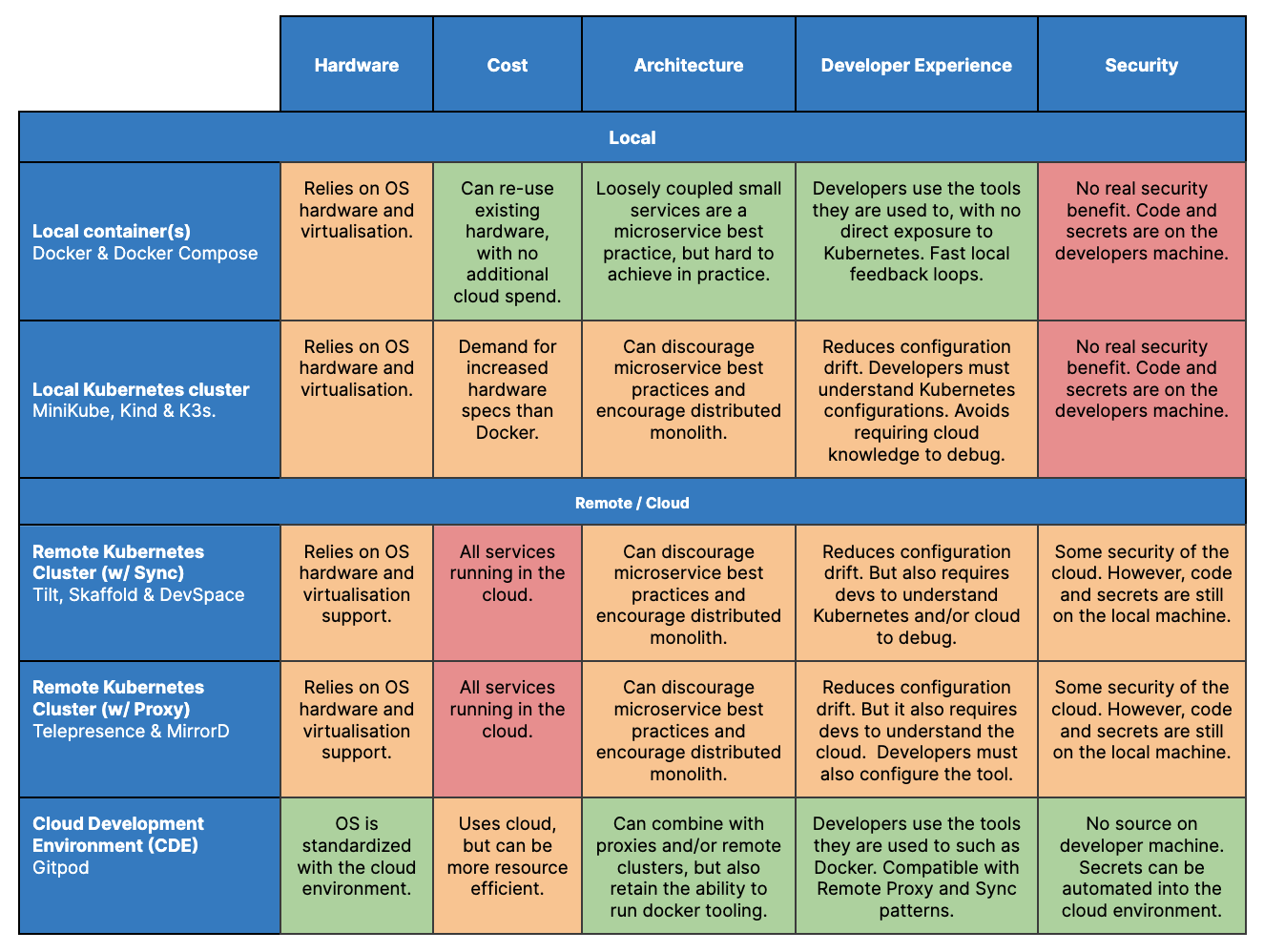

The patterns you adopt will always depend on your unique context; they are often complementary and can be used in conjunction with each other. Here are some aspects you’ll want to consider as you evaluate these patterns:

- Hardware: Do you have operating system requirements?

- Cost: Are there associated cloud or device costs worth considering?

- Architecture: How well does the pattern support architectural best practices (e.g. microservices)?

- Developer experience: How much knowledge is required to run the setup?

- Security: Where are source code and secrets stored?
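If you want to make these trade-offs explicit, one option is a simple weighted scoring matrix over the criteria above. The Python sketch below does just that; the weights and the 1 to 5 scores are placeholders your team would fill in for its own context, not our assessment of any pattern, which is why the example uses generic pattern names.

```python
CRITERIA = ("hardware", "cost", "architecture", "developer_experience", "security")


def rank_patterns(
    weights: dict[str, float], scores: dict[str, dict[str, int]]
) -> list[tuple[str, float]]:
    """Rank patterns by weighted average score (1 = poor fit, 5 = good fit per criterion)."""
    total_weight = sum(weights[c] for c in CRITERIA)
    ranked = [
        (pattern, round(sum(weights[c] * per_criterion[c] for c in CRITERIA) / total_weight, 2))
        for pattern, per_criterion in scores.items()
    ]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Placeholder inputs: a team that weights security twice as heavily as anything else
weights = {c: 1.0 for c in CRITERIA} | {"security": 2.0}
scores = {
    "pattern_a": {"hardware": 4, "cost": 5, "architecture": 3, "developer_experience": 2, "security": 5},
    "pattern_b": {"hardware": 2, "cost": 2, "architecture": 4, "developer_experience": 5, "security": 3},
}
print(rank_patterns(weights, scores))  # [('pattern_a', 4.0), ('pattern_b', 3.17)]
```

The value is less in the final number than in forcing the team to agree on weights, which surfaces whether cost, security, or developer experience actually matters most in your context.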

To summarise the patterns, here’s a comparison table:

Hopefully, seeing these options laid out as we covered them today helps you weigh the benefits and limitations and tweak each pattern to suit your needs. Kubernetes might not be strongly opinionated about its developer experience, but with these patterns, you can form your own opinions about how to build the best developer experience to drive your adoption of Kubernetes.

Read more about Kubernetes integration with Gitpod.